AEye: A Visualization Tool for Image Datasets

Florian Grötschla - ETH Zurich, Zurich, Switzerland

Luca A Lanzendörfer - ETH Zurich, Zurich, Switzerland

Marco Calzavara - ETH Zurich, Zurich, Switzerland

Roger Wattenhofer - ETH Zurich, Zurich, Switzerland

Room: Bayshore VI

2024-10-17T15:09:00Z

Keywords

Image embeddings, image visualization, contrastive learning, semantic search.

Abstract

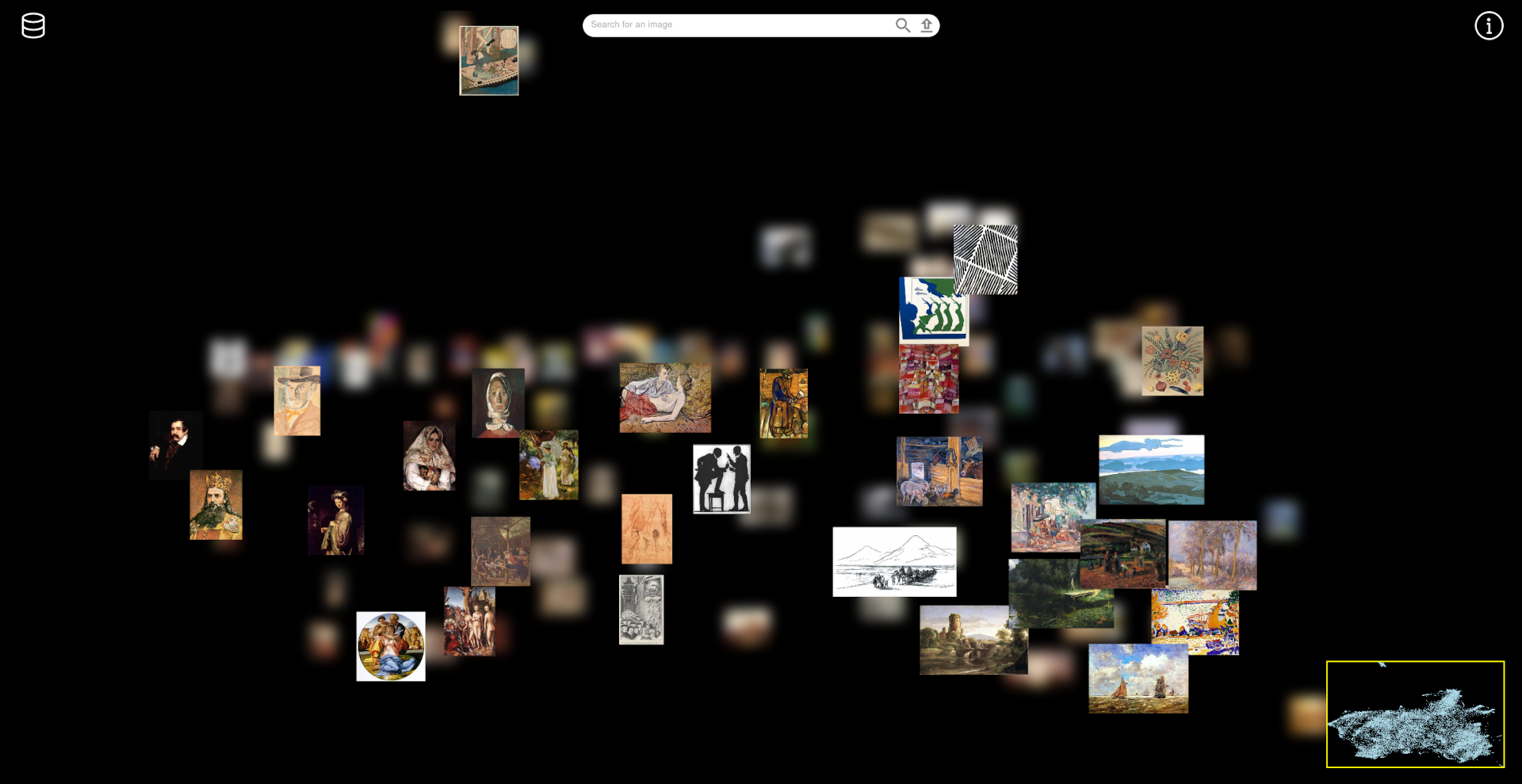

Image datasets are the foundation of machine learning models in computer vision. Together with architectural choices, they significantly shape model capabilities, performance, and biases. Understanding the composition and distribution of these datasets has therefore become increasingly important. To support intuitive exploration of such datasets, we propose AEye, an extensible and scalable visualization tool tailored to image datasets. AEye uses a contrastively trained model to embed images into semantically meaningful high-dimensional representations, which facilitate clustering and organizing the data. To visualize these representations, we project them onto a two-dimensional plane and arrange the images in layers, so that users can navigate and explore the dataset interactively. AEye also supports semantic search with both text and image queries. We open-source the AEye codebase and provide a simple configuration for adding new datasets.
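Semantic search with both text and image queries works because a contrastively trained model maps text and images into a shared embedding space. The following is a minimal sketch of that idea. It assumes the embeddings have already been computed by such a model, and it ranks images by cosine similarity to a query embedding. The toy vectors, file names, and the helpers `build_index` and `search` are hypothetical illustrations, not AEye's actual data or API.

```python
import math
from typing import Dict, List, Sequence, Tuple


def normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so that dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def build_index(embeddings: Dict[str, Sequence[float]]) -> Dict[str, List[float]]:
    """Hypothetical helper: pre-normalize every image embedding once, at indexing time."""
    return {name: normalize(vec) for name, vec in embeddings.items()}


def search(query: Sequence[float], index: Dict[str, List[float]],
           k: int = 3) -> List[Tuple[str, float]]:
    """Hypothetical helper: return the k images most cosine-similar to the query.

    The query embedding may come from the text encoder or the image encoder;
    because a contrastive model places both in one space, ranking is identical.
    """
    q = normalize(query)
    scored = [(name, sum(a * b for a, b in zip(q, vec))) for name, vec in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy 3-dimensional embeddings standing in for real model outputs (made-up values).
index = build_index({
    "cat_01.jpg": [0.9, 0.1, 0.0],
    "cat_02.jpg": [0.8, 0.2, 0.1],
    "car_01.jpg": [0.0, 0.1, 0.9],
})
text_query = [1.0, 0.0, 0.0]  # pretend embedding of the text "a photo of a cat"
result = search(text_query, index, k=2)
print(result)
```

With these toy vectors, the two cat images rank above the car image. A tool that targets large datasets would more likely compute these scores with batched matrix operations or an approximate nearest-neighbor index than with a Python loop. The linear scan here only keeps the sketch dependency-free.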