Active Appearance and Spatial Variation Can Improve Visibility in Area Labels for Augmented Reality

Hojung Kwon - Brown University, Providence, United States

Yuanbo Li - Brown University, Providence, United States

Xiaohan Ye - Brown University, Providence, United States

Praccho Muna-McQuay - Brown University, Providence, United States

Liuren Yin - Duke University, Durham, United States

James Tompkin - Brown University, Providence, United States

Room: Bayshore VI

2024-10-16T17:03:00Z

Keywords

Augmented reality, active labels, environment-adaptive

Abstract

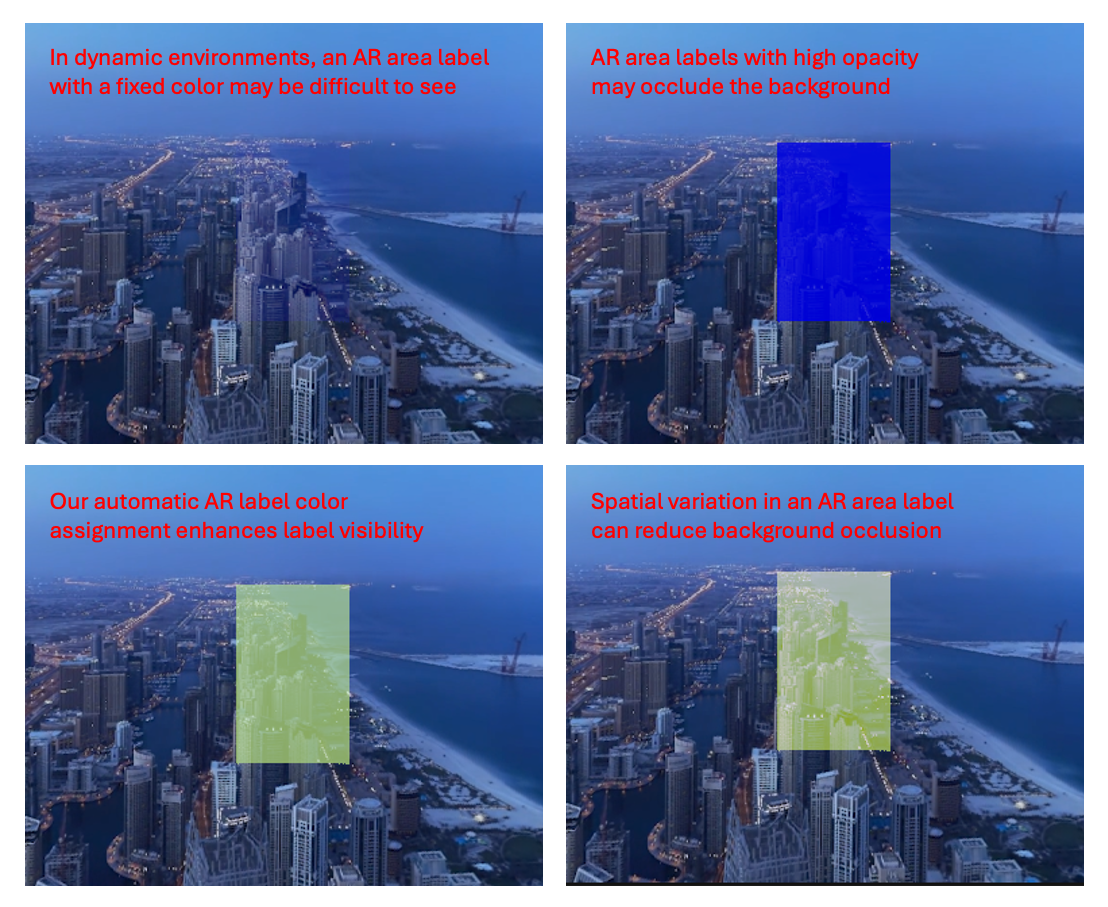

Augmented reality (AR) area labels can visualize real-world regions with arbitrary boundaries and show invisible objects or features. However, environmental conditions such as lighting and clutter can decrease the visibility of fixed or passive labels, and labels with high opacity can occlude crucial details in the environment. We design and evaluate active AR area label visualization modes that enhance visibility across real-life environments while still retaining environment details within the label. To do this, we define a characteristic color that is distant from the environment's colors in perceptual CIELAB space, then introduce spatial variations among label pixel colors based on the underlying environment variation. In a user study with 18 participants, we found that our active label visualization modes can be comparable in visibility to a fixed green baseline by Gabbard et al., and, with added spatial variation, can outperform it in cluttered environments, across varying levels of lighting (e.g., nighttime), and in environments with colors similar to the fixed baseline color.
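
The two ideas in the abstract (a label color far from the environment in CIELAB, plus per-pixel variation driven by the environment) can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function names, the candidate palette, the use of plain Euclidean (CIE76) distance to the mean environment color, and the `strength` parameter are all assumptions made for illustration. The sRGB to CIELAB conversion uses the standard D65 constants.

```python
import numpy as np

# D65 reference white and the standard linear-sRGB -> XYZ matrix.
_WHITE = np.array([0.95047, 1.0, 1.08883])
_RGB2XYZ = np.array([[0.4124564, 0.3575761, 0.1804375],
                     [0.2126729, 0.7151522, 0.0721750],
                     [0.0193339, 0.1191920, 0.9503041]])


def srgb_to_lab(rgb):
    """Convert sRGB values in [0, 1], shape (..., 3), to CIELAB."""
    rgb = np.asarray(rgb, dtype=float)
    # Undo the sRGB transfer curve to get linear light.
    linear = np.where(rgb <= 0.04045, rgb / 12.92,
                      ((rgb + 0.055) / 1.055) ** 2.4)
    xyz = linear @ _RGB2XYZ.T / _WHITE
    delta = 6 / 29
    f = np.where(xyz > delta ** 3, np.cbrt(xyz),
                 xyz / (3 * delta ** 2) + 4 / 29)
    L = 116 * f[..., 1] - 16
    a = 500 * (f[..., 0] - f[..., 1])
    b = 200 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)


def distant_color(env_rgb, candidates_rgb):
    """Hypothetical helper: index of the candidate farthest from the
    mean environment color in CIELAB, plus the candidates in CIELAB."""
    env_mean = srgb_to_lab(env_rgb).reshape(-1, 3).mean(axis=0)
    cand_lab = srgb_to_lab(candidates_rgb)
    dist = np.linalg.norm(cand_lab - env_mean, axis=-1)
    return int(np.argmax(dist)), cand_lab


def vary_lightness(label_lab, env_rgb, strength=0.5):
    """Hypothetical helper: shift the label's L* per pixel by the
    environment's deviation from its mean lightness (assumed scheme)."""
    env_L = srgb_to_lab(env_rgb)[..., 0]
    offset = strength * (env_L - env_L.mean())
    out = np.broadcast_to(label_lab, np.shape(env_rgb)).copy()
    out[..., 0] = np.clip(out[..., 0] + offset, 0.0, 100.0)
    return out


# Usage: a greenish environment should push the label away from green.
env = np.full((4, 4, 3), [0.1, 0.8, 0.1])
palette = np.array([[0.0, 1.0, 0.0],   # green
                    [1.0, 0.0, 1.0]])  # magenta
idx, palette_lab = distant_color(env, palette)
label = vary_lightness(palette_lab[idx], env)
```

In this sketch only lightness is varied, so the label keeps a single hue while brighter and darker regions of the environment remain distinguishable beneath it. Other choices (varying chroma, or using a different color-difference formula) would fit the same description.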