Evaluating the Semantic Profiling Abilities of LLMs for Natural Language Utterances in Data Visualization

Hannah K. Bako - University of Maryland, College Park, United States

Arshnoor Bhutani - University of Maryland, College Park, United States

Xinyi Liu - The University of Texas at Austin, Austin, United States

Kwesi Adu Cobbina - University of Maryland, College Park, United States

Zhicheng Liu - University of Maryland, College Park, United States

Screen-reader Accessible PDF

Download preprint PDF

Download Supplemental Material

Room: Bayshore VI

2024-10-17T14:33:00ZGMT-0600Change your timezone on the schedule page

2024-10-17T14:33:00Z

Fast forward

Full Video

Keywords

Human-centered computing—Visualization—Empirical studies in visualization;

Abstract

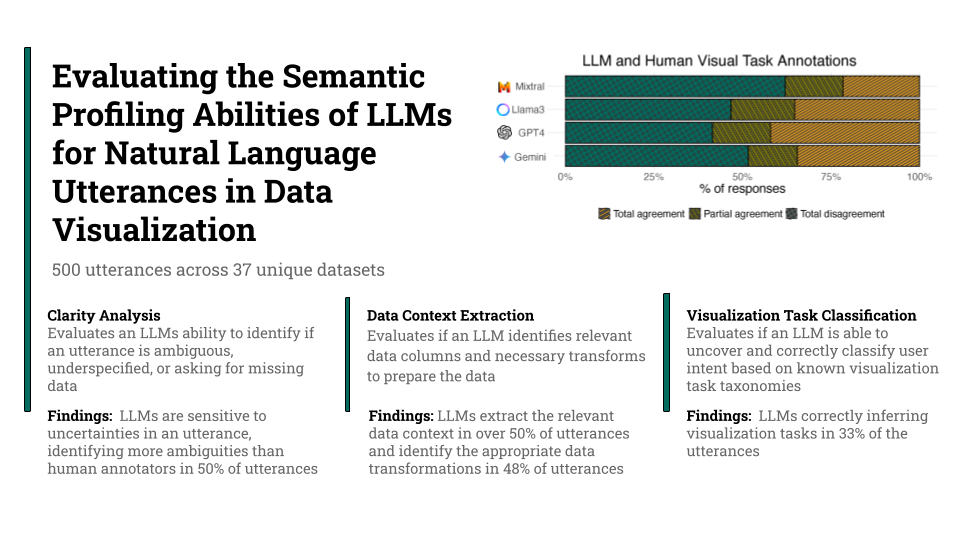

Automatically generating data visualizations in response to human utterances on datasets necessitates a deep semantic understanding of the utterance, including implicit and explicit references to data attributes, visualization tasks, and necessary data preparation steps. Natural Language Interfaces (NLIs) for data visualization have explored ways to infer such information, yet challenges persist due to inherent uncertainty in human speech. Recent advances in Large Language Models (LLMs) provide an avenue to address these challenges, but their ability to extract the relevant semantic information remains unexplored. In this study, we evaluate four publicly available LLMs (GPT-4, Gemini-Pro, Llama3, and Mixtral), investigating their ability to comprehend utterances even in the presence of uncertainty and identify the relevant data context and visual tasks. Our findings reveal that LLMs are sensitive to uncertainties in utterances. Despite this sensitivity, they are able to extract the relevant data context. However, LLMs struggle with inferring visualization tasks. Based on these results, we highlight future research directions on using LLMs for visualization generation. Our supplementary materials have been shared on GitHub: https://github.com/hdi-umd/Semantic_Profiling_LLM_Evaluation.